Intro to Neural Networks

The simplest neural network

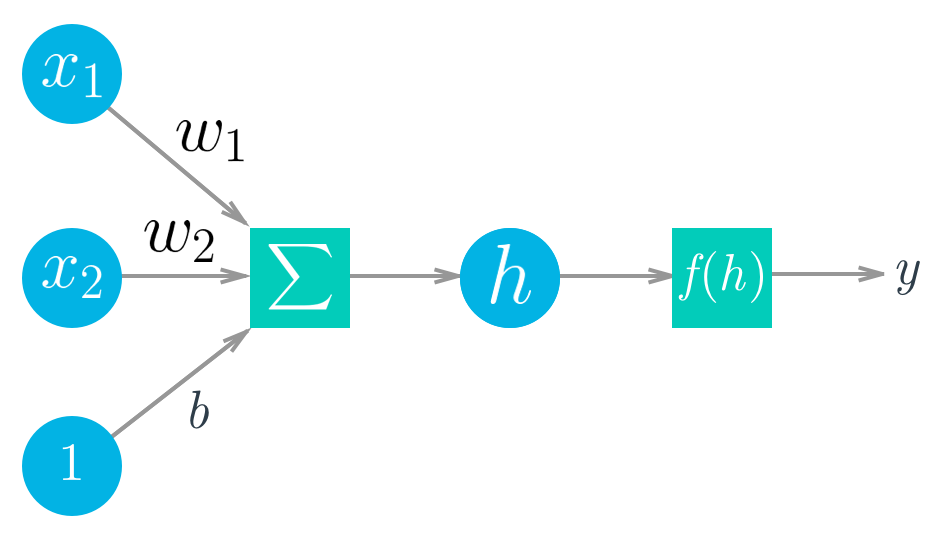

Diagram of a simple neural network. Circles are units, boxes are operations

- The activation function can be any function, not just the step function shown earlier.

- Other activation functions are the logistic (often called the sigmoid), tanh, and softmax functions.
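
The activation functions named above can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the function names are chosen here for clarity, and the step function's convention (output 1 when the input is at least 0) is an assumption, since the earlier definition is not shown in this section.

```python
import numpy as np

def step(h):
    # Step function (assumed convention): 1 where h >= 0, otherwise 0
    return np.where(h >= 0, 1.0, 0.0)

def sigmoid(h):
    # Logistic function: squashes any real value into (0, 1)
    return 1.0 / (1.0 + np.exp(-h))

def tanh(h):
    # Hyperbolic tangent: squashes any real value into (-1, 1)
    return np.tanh(h)

def softmax(h):
    # Normalized exponentials over a vector; the outputs sum to 1.
    # Subtracting the max first avoids overflow without changing the result.
    exps = np.exp(h - np.max(h))
    return exps / np.sum(exps)

h = np.array([-2.0, 0.0, 2.0])
for name, f in [("step", step), ("sigmoid", sigmoid),
                ("tanh", tanh), ("softmax", softmax)]:
    print(name, f(h))
```

Unlike the other three, softmax acts on a whole vector at once rather than on each value independently, which is why it is typically used when a network has several outputs.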

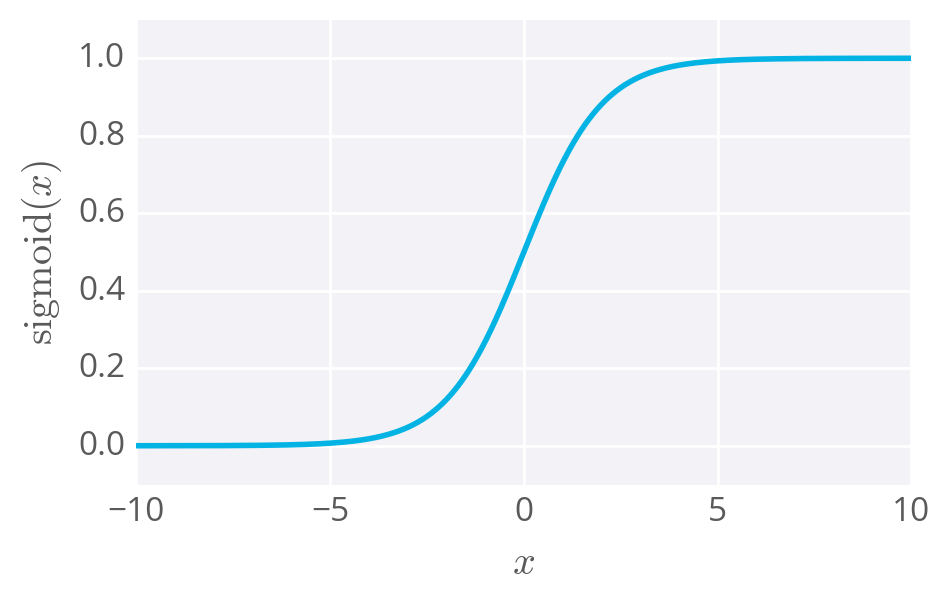

Sigmoid function

The Sigmoid Function

- The sigmoid function is bounded between 0 and 1.

- Its output can therefore be interpreted as a probability of success.

- As before, using a sigmoid as the activation function gives the same formulation as logistic regression.
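
These properties can be checked numerically. The sketch below is only an illustration under stated assumptions: the input range of -10 to 10 is arbitrary, and it is kept moderate because floating-point rounding makes very large inputs evaluate to exactly 0 or 1. The last check shows the link to logistic regression: the log-odds of the sigmoid output recover the input to the sigmoid.

```python
import numpy as np

def sigmoid(h):
    return 1.0 / (1.0 + np.exp(-h))

# Arbitrary, moderate range of inputs (an assumption for this demo)
h = np.linspace(-10.0, 10.0, 201)
y = sigmoid(h)

# Bounded strictly between 0 and 1 on this range
print(y.min() > 0.0, y.max() < 1.0)

# An input of 0 maps to a probability of 0.5
print(sigmoid(0.0))

# Log-odds of the output give back the input, as in logistic regression
log_odds = np.log(y / (1.0 - y))
print(np.allclose(log_odds, h))
```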

Simple network implementation

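The output of the network is

$$y = f(h) = \mathrm{sigmoid}\left(\sum_i w_i x_i + b\right)$$

where $x_i$ are the inputs, $w_i$ the weights, $b$ the bias, and $f$ the activation function.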

    import numpy as np

    def sigmoid(x):
        # Sigmoid activation function
        return 1 / (1 + np.exp(-x))

    inputs = np.array([0.7, -0.3])
    weights = np.array([0.1, 0.8])
    bias = -0.1

    # Calculate the output: sigmoid of the weighted sum of inputs plus the bias
    output = sigmoid(np.dot(weights, inputs) + bias)

    print('Output:')
    print(output)  # 0.432907095035
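
The same calculation can be wrapped in a reusable function. The sketch below is one possible arrangement, not part of the original example: the helper name `forward`, its `activation` parameter, and the second row of the batch are all hypothetical and introduced here only for illustration. Because `np.dot` accepts a 2-D array on the left, the same function handles a single example or one example per row.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def forward(inputs, weights, bias, activation=sigmoid):
    # Hypothetical helper: weighted sum of inputs plus bias, then activation.
    # inputs may be 1-D (one example) or 2-D (one example per row).
    return activation(np.dot(inputs, weights) + bias)

weights = np.array([0.1, 0.8])
bias = -0.1

# Single example: same values as the original calculation
single = forward(np.array([0.7, -0.3]), weights, bias)

# Batch of two examples; the second row is made up for this demo
batch = forward(np.array([[0.7, -0.3],
                          [0.0, 0.0]]), weights, bias)

print(single)
print(batch)
```

Passing a different function as `activation` (for example `np.tanh`) changes the unit's nonlinearity without touching the rest of the computation.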