Recurrent Neural Network

Outline:

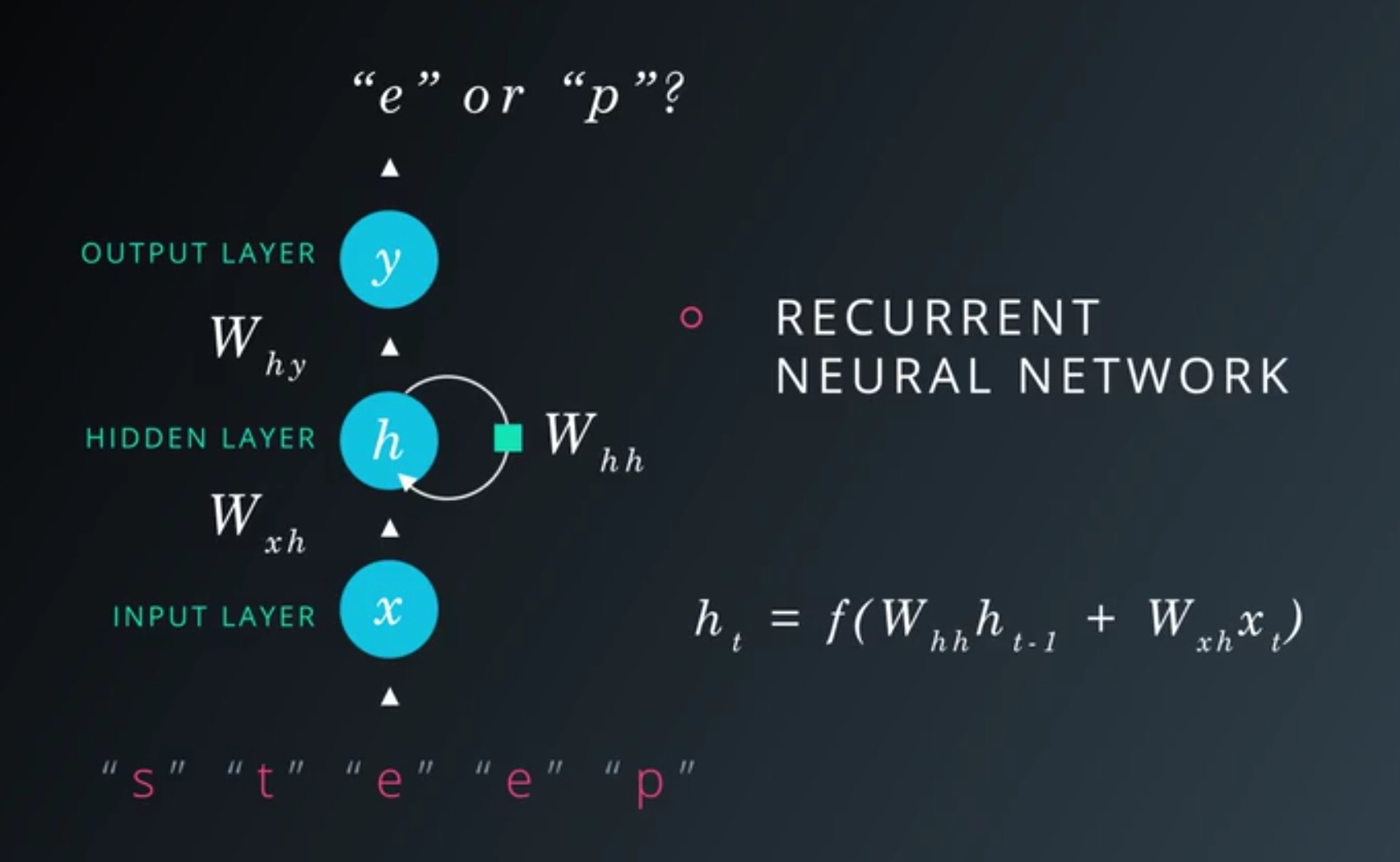

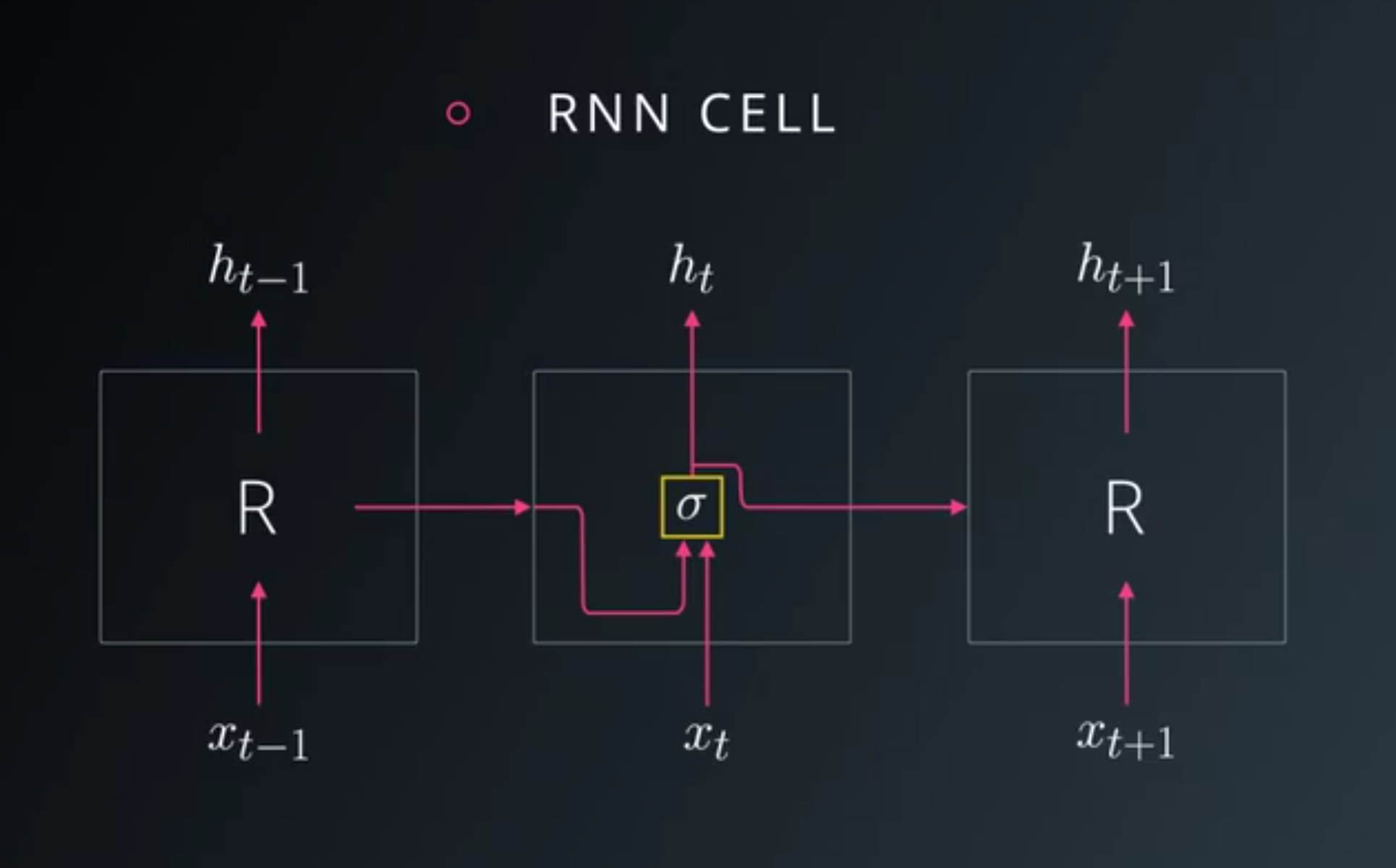

Intro to RNN

- In feed-forward networks, there is no sense of order in the inputs.

- The idea is to build order into the network, so that it carries information about the sequence of the inputs.

- Split the data into parts (text -> words).

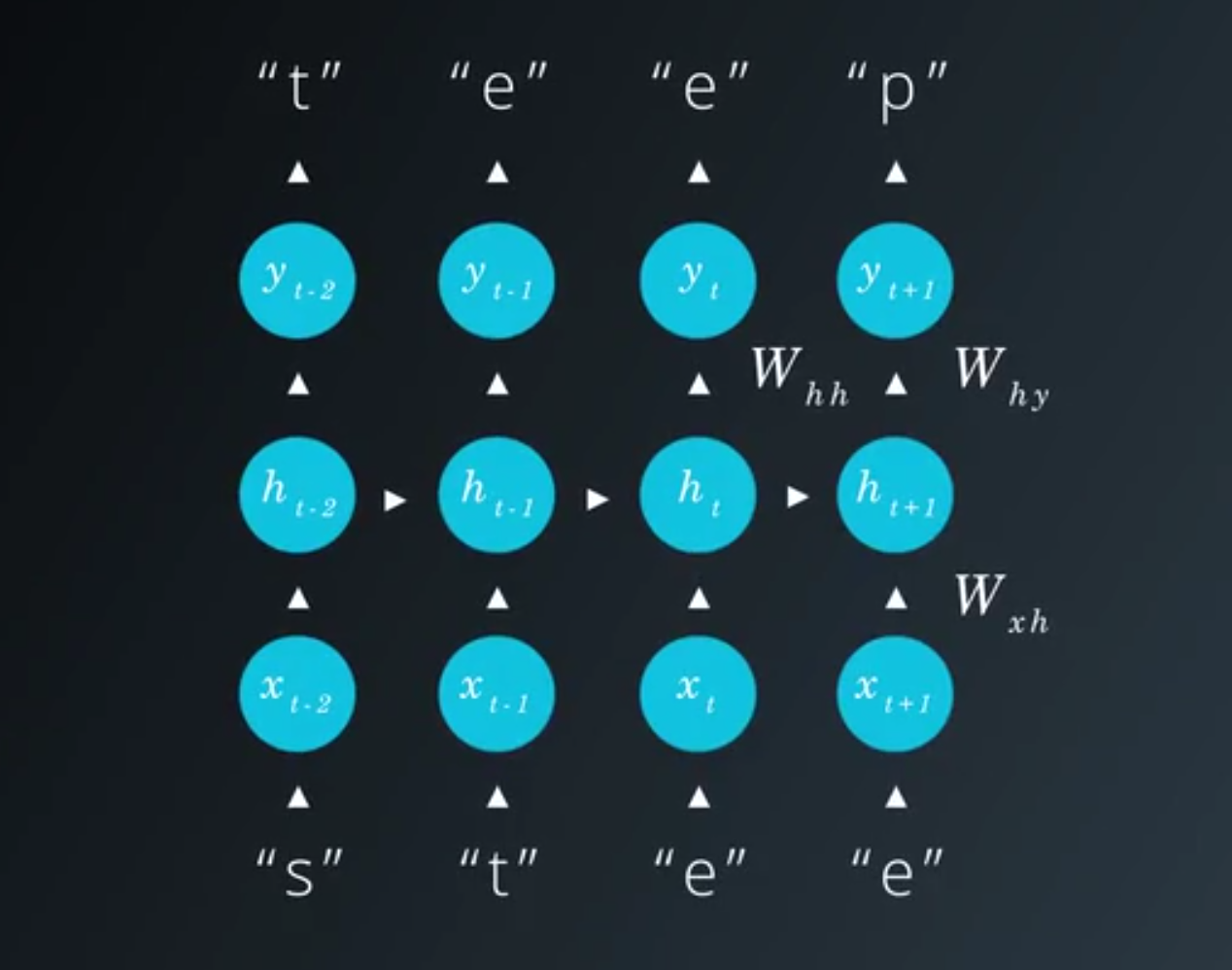

- Route the hidden layer output from the previous step back into the hidden layer.

- This architecture is called a Recurrent Neural Network (RNN).

- The total input to the hidden layer is the sum of the combinations from the input layer and the previous hidden layer.

- Example

- word -> characters. (steep -> ‘s’, ‘t’, ‘e’, ‘e’, ‘p’)
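
The recurrence described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the weight names `W_xh`, `W_hh`, `b_h`, the `tanh` activation, the hidden size of 3 and the random initialisation are all assumptions made for the example. It feeds the word "steep" one character at a time and sums the contribution of the current input with that of the previous hidden state.

```python
import math
import random

def one_hot(index, size):
    """Return a list of zeros with a single 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: sum the input and previous-hidden contributions."""
    from_input = matvec(W_xh, x)        # contribution of the input layer
    from_hidden = matvec(W_hh, h_prev)  # contribution of the previous hidden state
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_hidden, b_h)]

def run_rnn(word, hidden_size=3, seed=0):
    """Feed a word character by character; return the hidden state after each step."""
    rng = random.Random(seed)
    vocab = sorted(set(word))
    # Hypothetical, randomly initialised weights (an untrained network).
    W_xh = [[rng.uniform(-0.5, 0.5) for _ in vocab] for _ in range(hidden_size)]
    W_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
            for _ in range(hidden_size)]
    b_h = [0.0] * hidden_size
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for ch in word:
        x = one_hot(vocab.index(ch), len(vocab))
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

states = run_rnn("steep")
```

The two consecutive 'e' characters receive the same input vector but produce different hidden states, because each step also depends on the hidden state left by the characters before it.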

LSTM

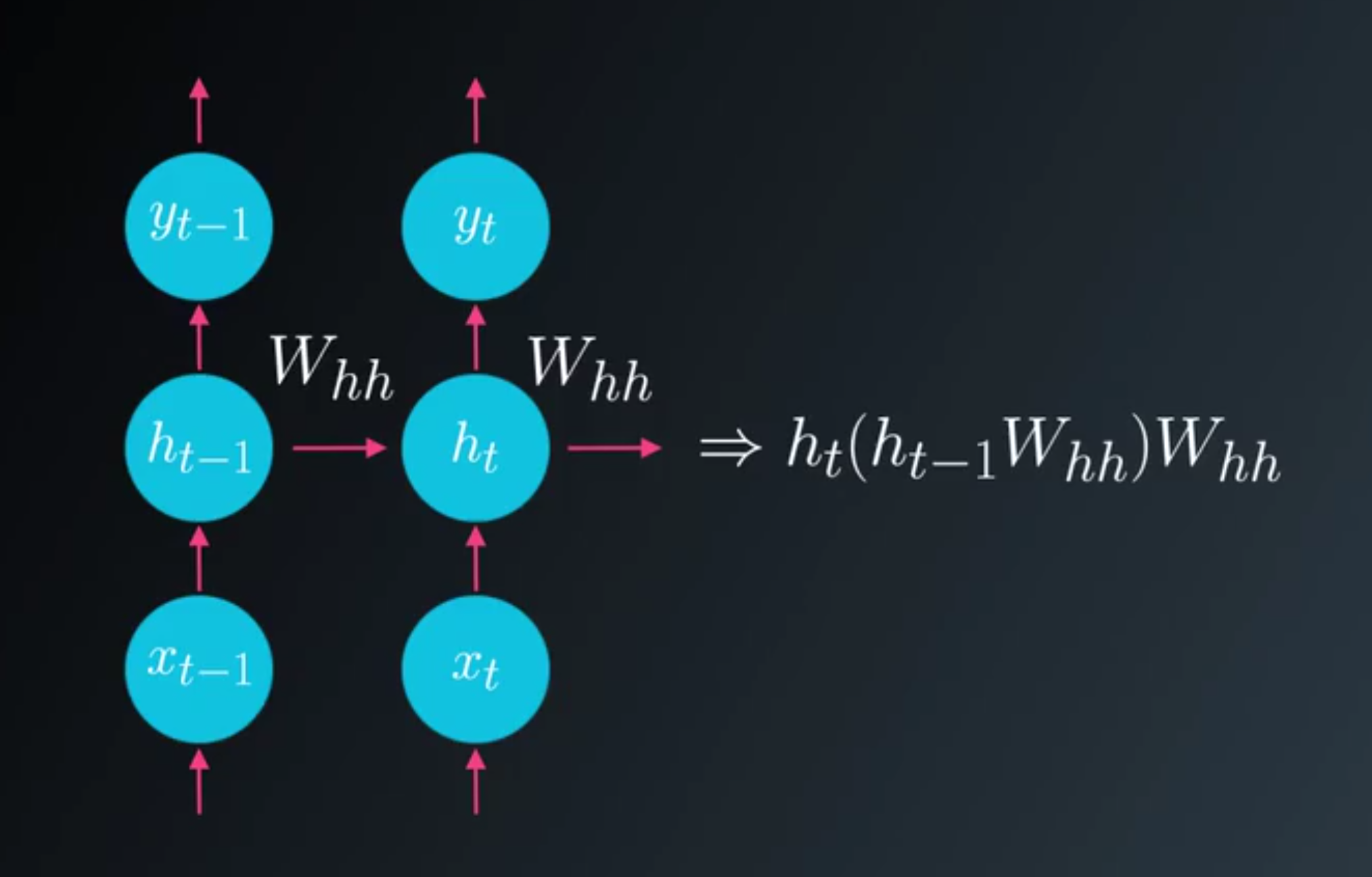

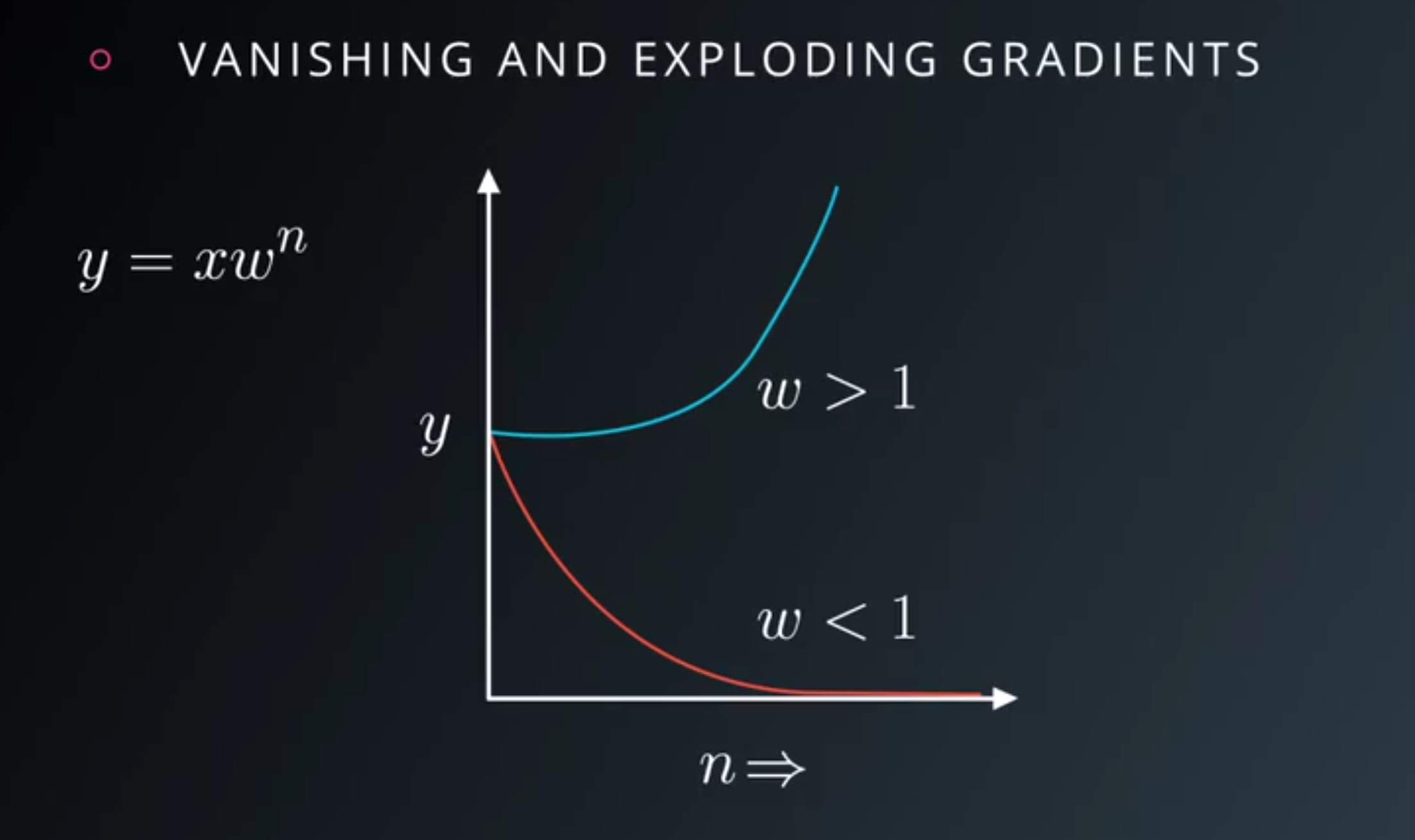

- In an RNN, repeated multiplication through the hidden layer leads to a problem: the gradient can become

- really small and vanish

- really large and explode

- We can think of an RNN as

- a bunch of cells with inputs and outputs

- with network layers inside the cells

- To solve the problem of vanishing gradients

- Use more complicated cells, called LSTM (Long Short-Term Memory) cells
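
The vanishing and exploding behaviour can be illustrated with a deliberately simplified sketch. It assumes a single scalar weight standing in for the hidden-to-hidden weights and ignores the activation function's derivative; the function name `gradient_scale` and the values 0.5, 1.5 and 50 steps are chosen only for illustration.

```python
def gradient_scale(recurrent_weight, steps):
    """Factor a gradient picks up when multiplied by the same weight at every step."""
    scale = 1.0
    for _ in range(steps):
        scale *= recurrent_weight
    return scale

vanishing = gradient_scale(0.5, 50)  # weight below 1: shrinks toward zero
exploding = gradient_scale(1.5, 50)  # weight above 1: grows very large
```

With a weight below 1 the factor collapses toward zero, so early time steps receive almost no learning signal; with a weight above 1 it grows without bound.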

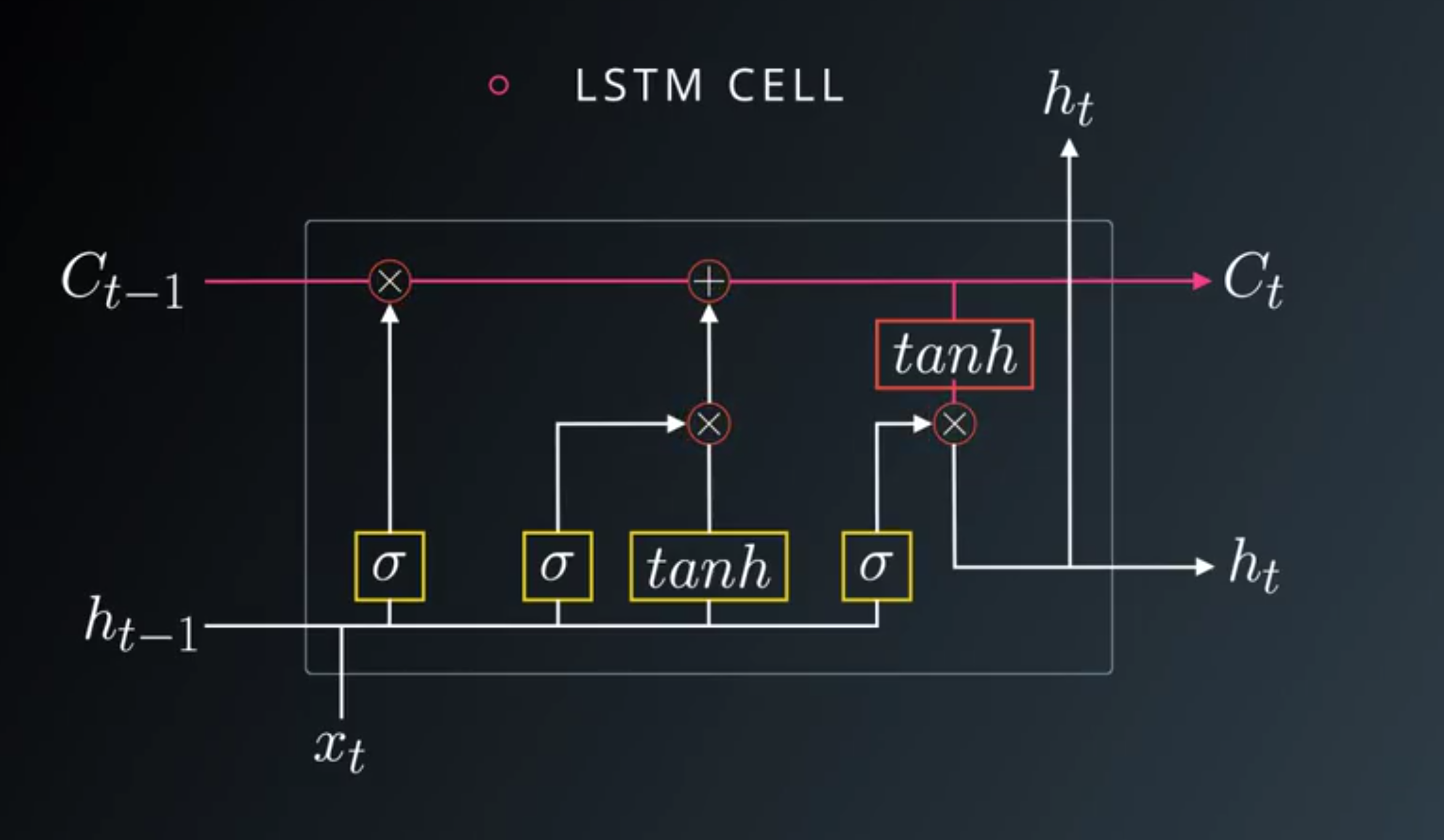

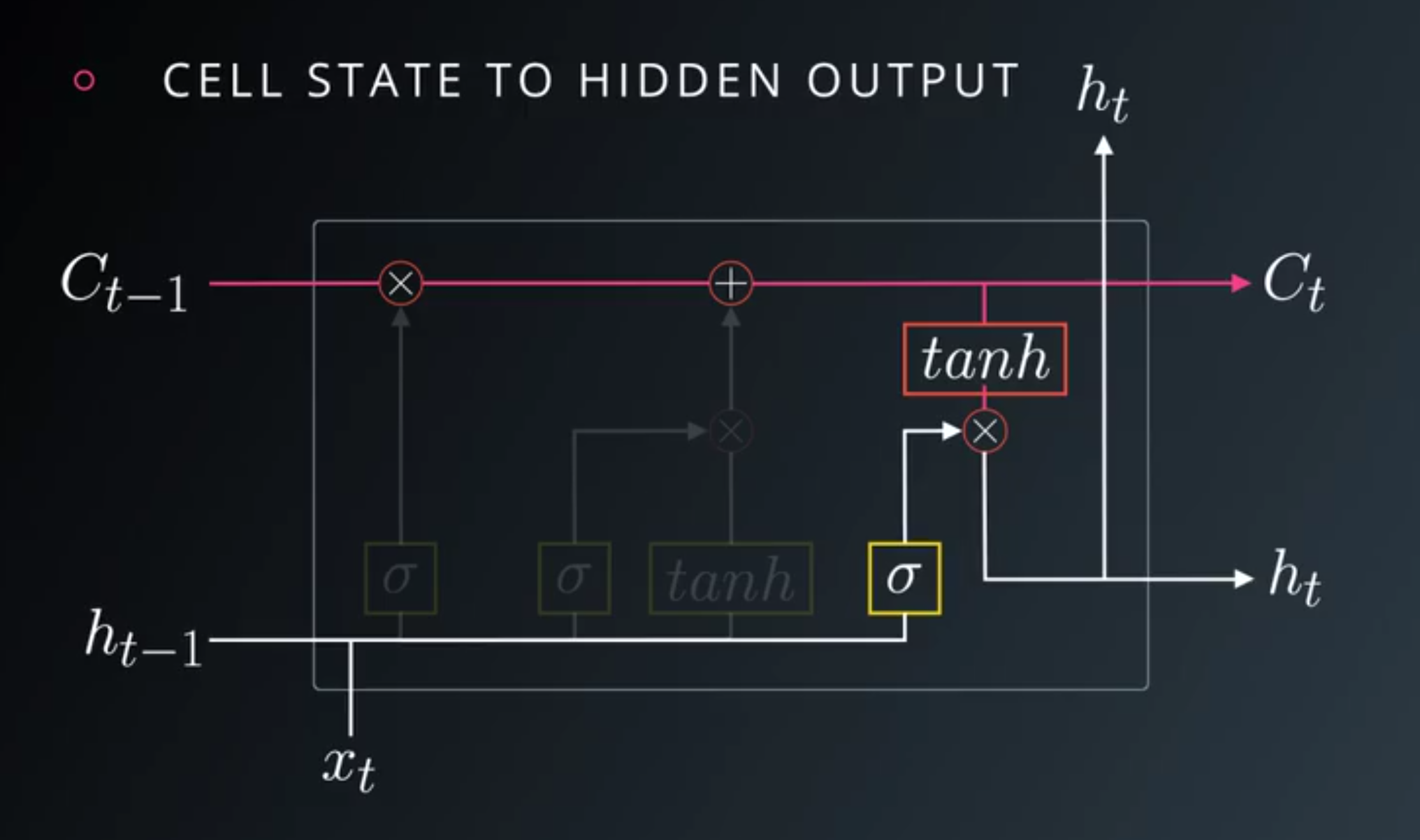

LSTM cell

- 4 network layers, shown as yellow boxes

- each of them with its own weights

- σ is the sigmoid function

- tanh is the hyperbolic tangent function

- similar to the sigmoid in that it squashes its inputs

- output is between -1 and 1

- Red circles are point-wise (element-wise) operations

- Cell state, labeled as C

- Goes through the LSTM cell with little interaction

- allowing information to flow easily through the cells.

- Modified only by element-wise operations, which function as gates

- Hidden state is calculated from the cell state
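
As a small sketch of the building blocks named above, the snippet below evaluates the sigmoid and the hyperbolic tangent on a few inputs and applies an element-wise gate to a vector. The variable names and the example values are made up for illustration.

```python
import math

def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

inputs = [-5.0, 0.0, 5.0]
sigmoid_outputs = [sigmoid(x) for x in inputs]  # values between 0 and 1
tanh_outputs = [math.tanh(x) for x in inputs]   # values between -1 and 1

# An element-wise operation acting as a gate: each gate value scales
# the matching entry of the cell state (1.0 keeps it, 0.0 removes it).
cell_state = [2.0, -1.0, 0.5]
gate = [1.0, 0.5, 0.0]
gated = [g * c for g, c in zip(gate, cell_state)]
```

Because the sigmoid output lies between 0 and 1, multiplying by it works like a dial that lets through all, some, or none of each entry.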

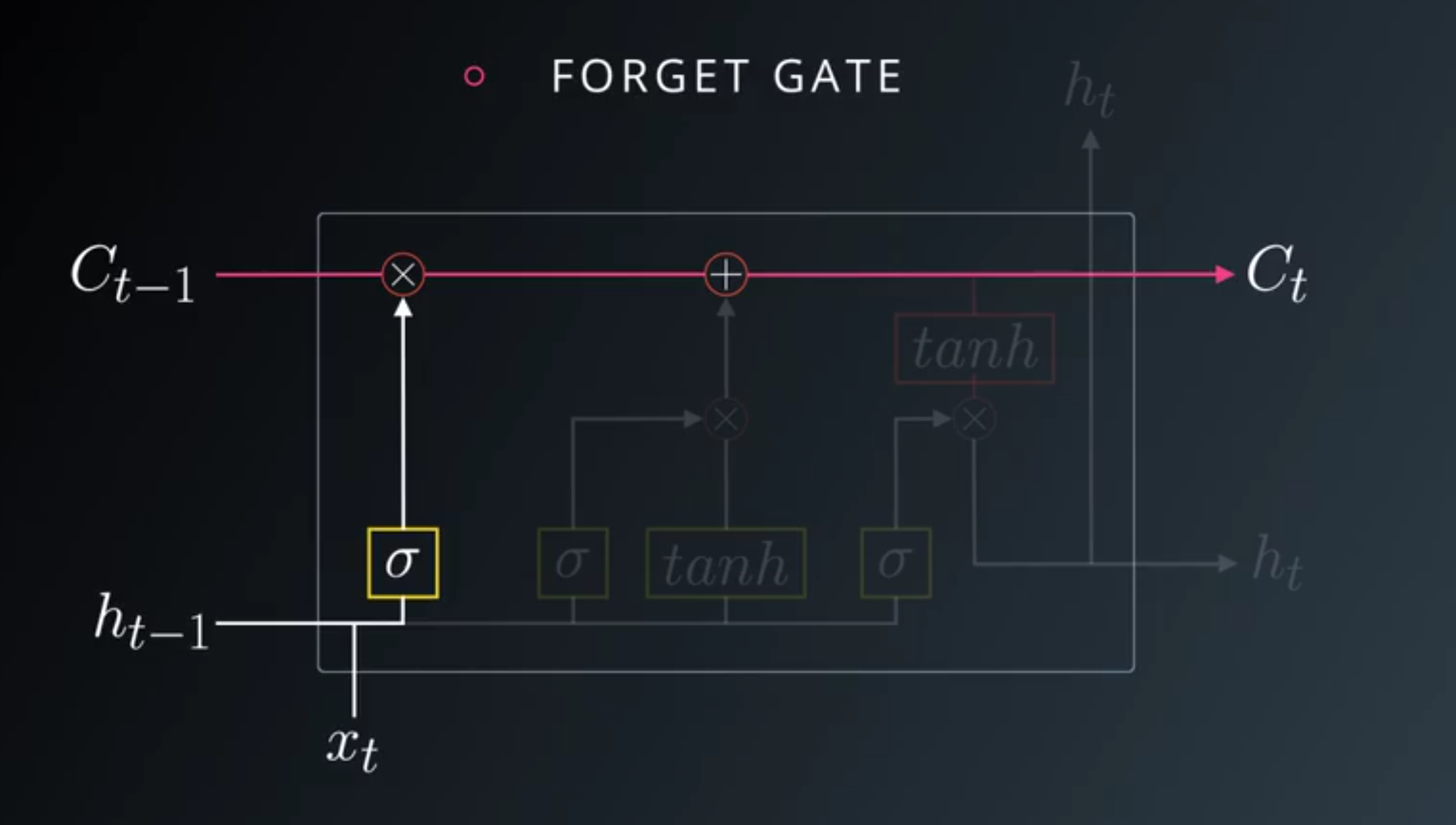

- Forget Gate

- The network can learn to forget information that causes incorrect predictions (gate output: 0).

- It can keep long-range information that is helpful (gate output: 1).
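
In the commonly used formulation, the forget gate can be written as follows. The symbols are assumptions rather than notation taken from these notes: $x_t$ is the current input, $h_{t-1}$ the previous hidden state, $C_{t-1}$ the previous cell state, $W_f$ and $b_f$ the gate's weights and bias, and $\odot$ element-wise multiplication.

$$
f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right), \qquad \text{retained state} = f_t \odot C_{t-1}
$$

Entries of $f_t$ near 0 erase the corresponding entries of the cell state, and entries near 1 pass them through unchanged.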

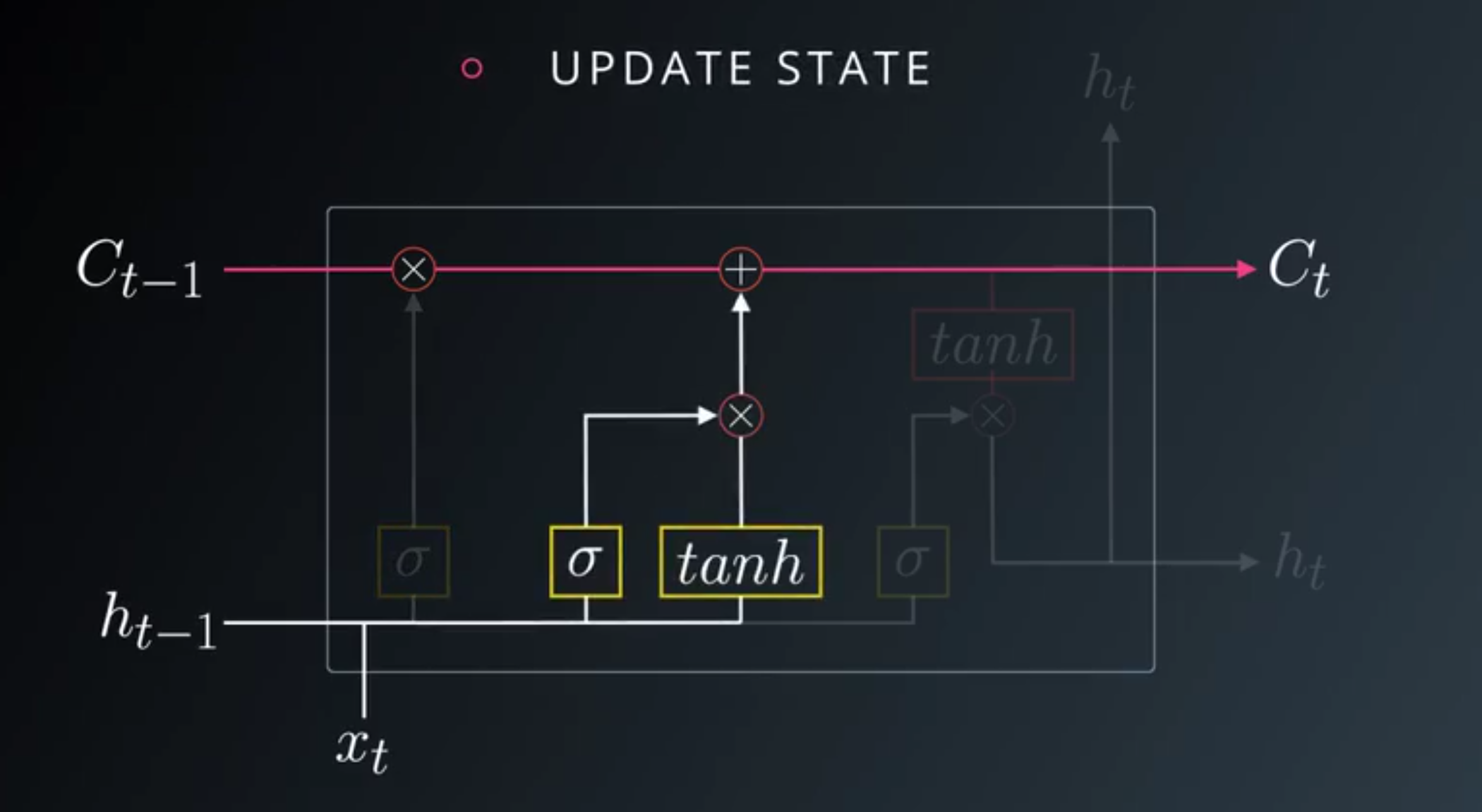

- Update State

- This gate updates the cell state from the input and the previous hidden state

- Cell State to Hidden Output

- The cell state is used to produce the hidden state, which is passed on to the next cell.

- Sigmoid gates let the network learn which information to keep and which to get rid of.
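
Putting the three steps together, one LSTM step might be sketched as below. This follows the commonly used formulation with four layers; the parameter keys `"f"`, `"i"`, `"g"`, `"o"`, the helper `make_params`, and the hand-picked biases are assumptions for illustration, not values a trained network would have.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(W, b, v):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps 'f', 'i', 'g', 'o' to (W, b) pairs."""
    z = h_prev + x  # concatenate previous hidden state and current input
    f = [sigmoid(v) for v in linear(*params["f"], z)]    # forget gate
    i = [sigmoid(v) for v in linear(*params["i"], z)]    # update gate
    g = [math.tanh(v) for v in linear(*params["g"], z)]  # candidate values
    o = [sigmoid(v) for v in linear(*params["o"], z)]    # output gate
    # New cell state: keep part of the old state, add part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Hidden state is computed from the cell state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def make_params(hidden, inputs, forget_bias):
    """Hypothetical parameters: zero weights, update gate held shut."""
    zeros = [[0.0] * (hidden + inputs) for _ in range(hidden)]
    return {
        "f": (zeros, [forget_bias] * hidden),
        "i": (zeros, [-20.0] * hidden),
        "g": (zeros, [0.0] * hidden),
        "o": (zeros, [0.0] * hidden),
    }

c_prev = [0.7, -0.3]
h_prev = [0.0, 0.0]
x = [1.0]
_, c_keep = lstm_step(x, h_prev, c_prev, make_params(2, 1, forget_bias=20.0))
_, c_forget = lstm_step(x, h_prev, c_prev, make_params(2, 1, forget_bias=-20.0))
```

With the forget gate saturated near 1, `c_keep` is almost identical to `c_prev`; with it saturated near 0, `c_forget` is almost zero. In a trained network the gate values come from learned weights rather than fixed biases.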

Character-wise RNN

Resources

Here are a few great resources for you to learn more about recurrent neural networks. We’ll also continue to cover RNNs over the coming weeks.

- Andrej Karpathy’s lecture on RNNs and LSTMs from CS231n

- A great blog post by Christopher Olah on how LSTMs work.

- Building an RNN from the ground up. This is a little more advanced, but it includes an implementation in TensorFlow.

- Understanding LSTM Networks

- LSTM Networks for Sentiment Analysis

- A Beginner’s Guide to Recurrent Networks and LSTMs

- TensorFlow’s Recurrent Neural Network Tutorial

- Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras

- Demystifying LSTM neural networks