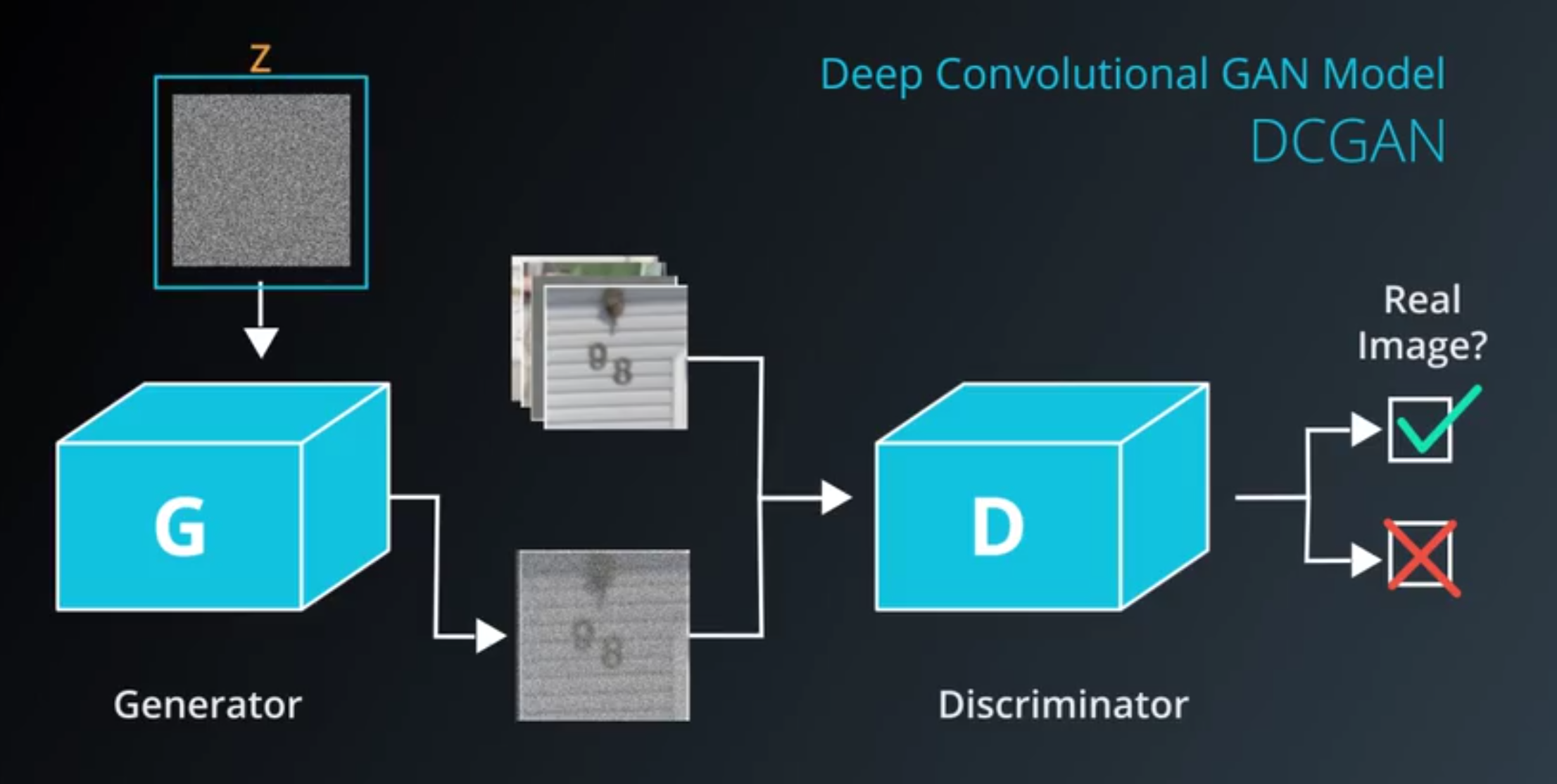

Deep Convolutional GANs

Outline:

DCGAN Architecture

- DCGAN

- Use deep convolutional networks for the generator and the discriminator.

- Otherwise, it is the same as the original GAN.

- The generator is trying to fool the discriminator with fake images

- That means we need to upsample the input vector to make a layer with the same shape as the real images.

- In an autoencoder, we resized layers using nearest-neighbor interpolation.

- For this GAN model, we use transposed convolutions instead.

- The discriminator is trying to properly classify images as real or fake.
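
To make the contrast with transposed convolutions concrete, here is a minimal sketch of nearest-neighbor upsampling on a 2D grid in plain Python. `nearest_neighbor_upsample` is a hypothetical helper, and the grid is a list of rows rather than a real image tensor. Every output pixel is a copy of its nearest input pixel, so there is nothing to learn. A transposed convolution instead learns its kernel weights during training.

```python
def nearest_neighbor_upsample(image, factor=2):
    """Upsample a 2D grid (list of rows) by repeating each pixel factor x factor times.

    There are no learned parameters: every output pixel is a copy of its
    nearest input pixel.
    """
    upsampled = []
    for row in image:
        # Repeat each pixel horizontally.
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Repeat the widened row vertically.
        for _ in range(factor):
            upsampled.append(list(wide_row))
    return upsampled


small = [[1, 2],
         [3, 4]]
for row in nearest_neighbor_upsample(small):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```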

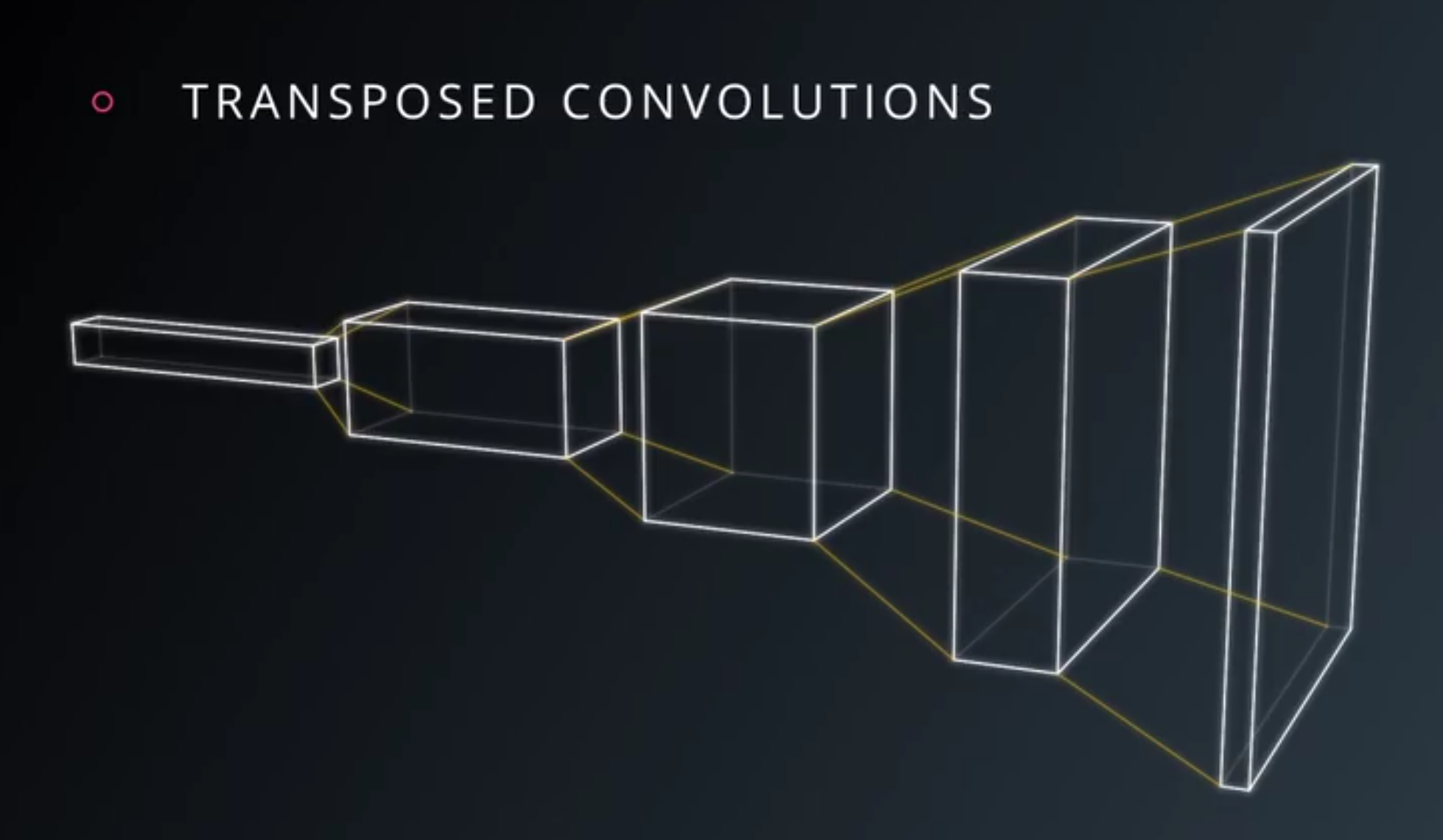

- Transposed convolutions

- Similar to regular convolutions, but flipped

- They go from narrow and deep to wide and flat.

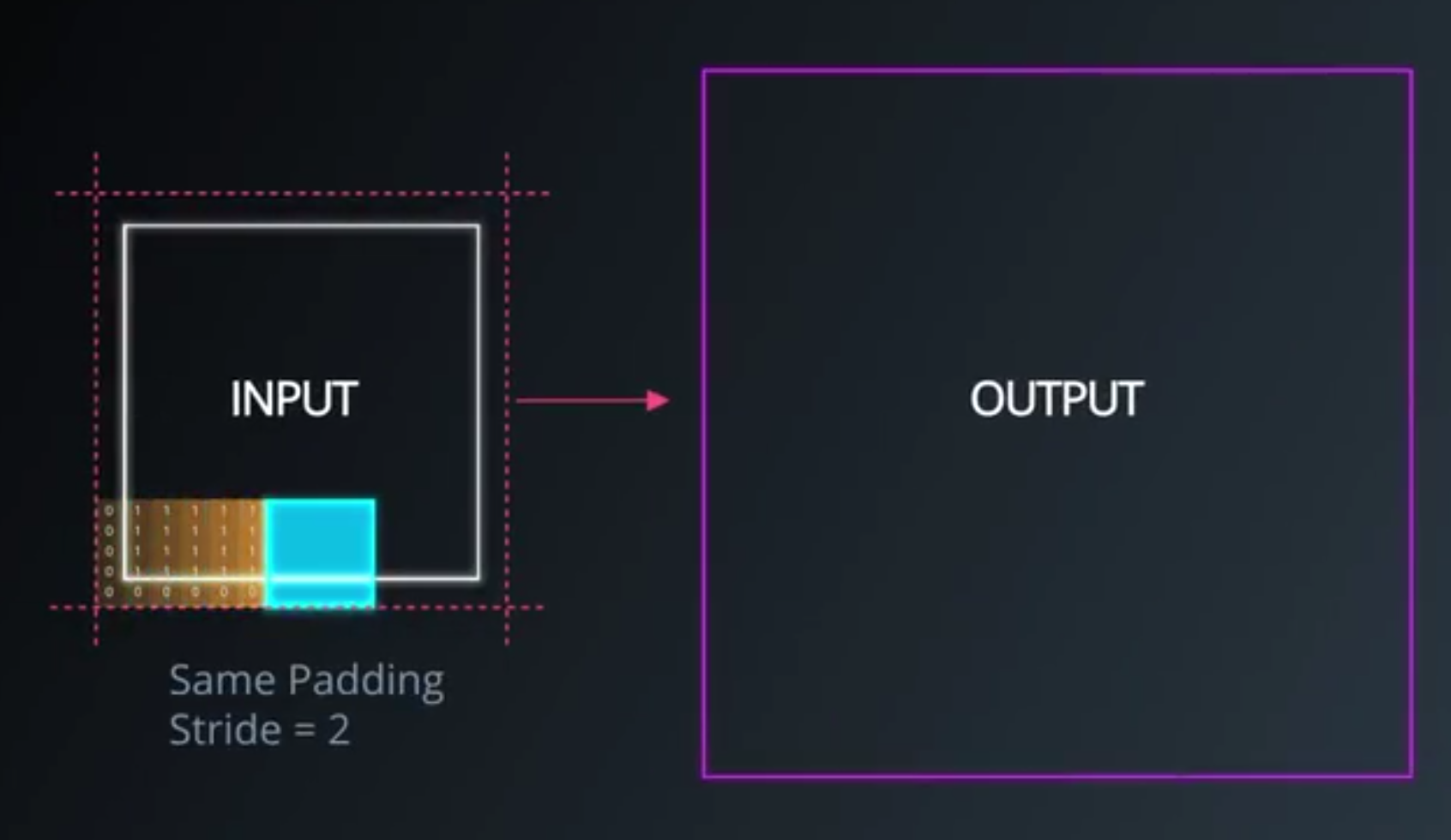

- With a stride of 2, when you move the kernel 1 pixel in the input layer, the patch moves 2 pixels in the output layer.
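
The stride behavior above can be checked with a minimal single-channel, one-dimensional transposed convolution in plain Python. This is a sketch that assumes no padding and a fixed kernel of ones. Real layers learn their kernels and operate on 2D, multi-channel tensors. `conv_transpose_1d` is a hypothetical helper name. Each input value scales a copy of the kernel and adds it into the output starting at position `i * stride`, so with a stride of 2 a length-3 input becomes a length-7 output.

```python
def conv_transpose_1d(inputs, kernel, stride=2):
    """Minimal 1D transposed convolution (single channel, no padding).

    Each input value scales a copy of the kernel, which is added into the
    output starting at position i * stride. Moving one step in the input
    therefore moves the output patch by `stride` positions.
    """
    k = len(kernel)
    out_len = (len(inputs) - 1) * stride + k
    output = [0.0] * out_len
    for i, x in enumerate(inputs):
        start = i * stride
        for j, w in enumerate(kernel):
            output[start + j] += x * w
    return output


# A single nonzero input shows where its patch lands in the output.
print(conv_transpose_1d([1, 0, 0], kernel=[1, 1, 1]))  # [1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
print(conv_transpose_1d([0, 1, 0], kernel=[1, 1, 1]))  # [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
```

Where neighboring patches overlap, their contributions add: `conv_transpose_1d([1, 2], [1, 1, 1])` gives `[1.0, 1.0, 3.0, 2.0, 2.0]`.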

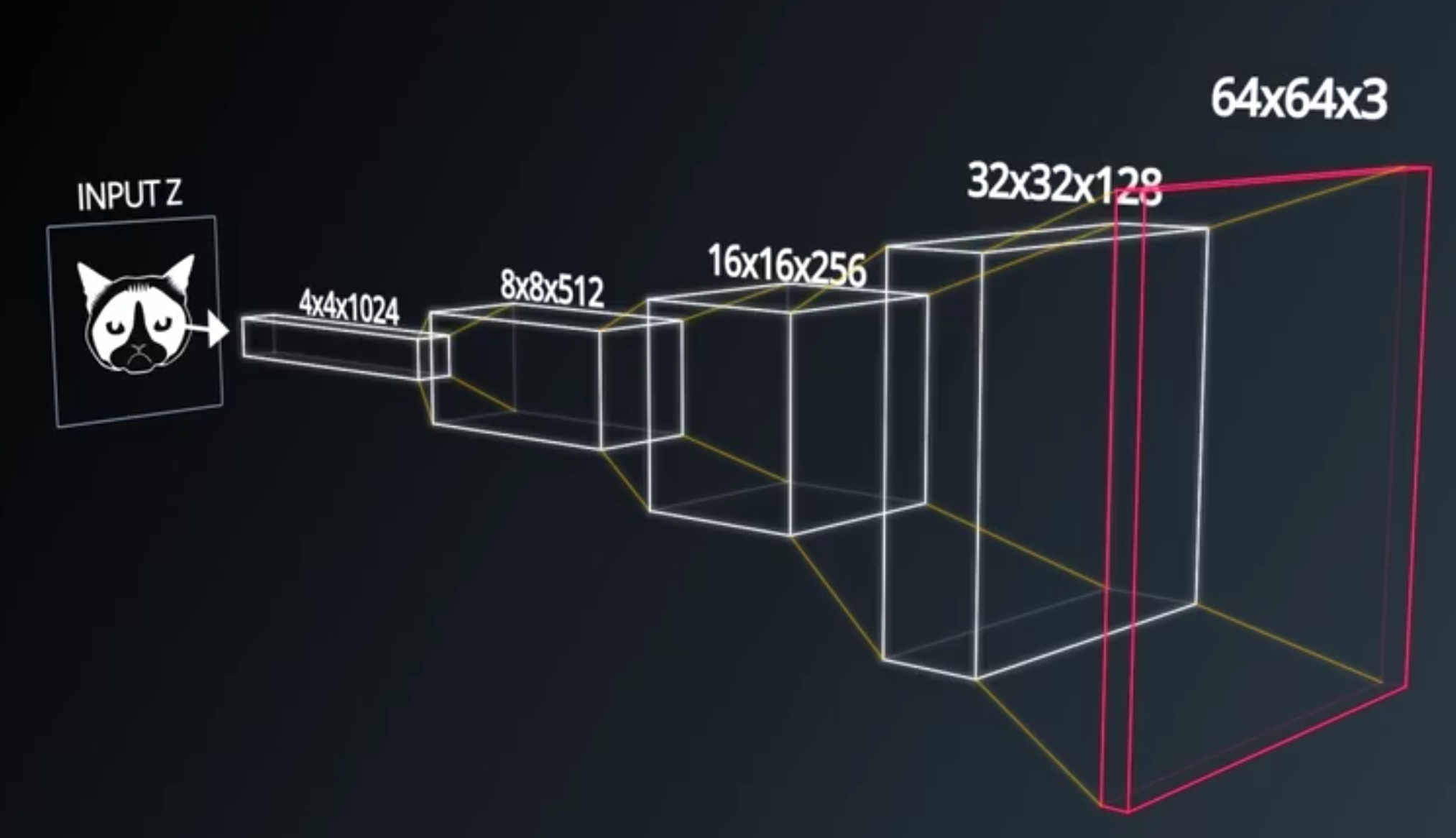

- DCGAN generator

- The first step is to connect the input vector z to a fully connected layer.

- Then reshape the output of the fully connected layer to whatever depth you choose for this first layer.

- Then we build a stack of layers by upsampling with transposed convolutions.

- The final layer, the output of the generator, is a convolutional layer.

- This layer has the same size as your real images.

- There are no max pool or fully connected layers in the network, just convolutions.

- The size of each new layer depends on the stride; with a stride of 2, each layer typically doubles the height and width.

- In the generator, each layer uses a ReLU activation and batch normalization.

- Batch normalization scales the layer inputs to have a mean of 0 and a variance of 1.

- This helps the network train faster, and reduces problems due to poor parameter initialization.
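
The generator's layer shapes can be traced with a few lines of plain Python. This is a sketch under assumed hyperparameters, not values given in these notes: a 100-dimensional z, a 4×4×512 starting tensor, kernel size 4, stride 2, padding 1, and 32×32 RGB real images. The helper names are hypothetical. `conv_transpose_out_size` uses the standard transposed-convolution output-size formula with dilation 1 (the same formula documented for PyTorch's `ConvTranspose2d`).

```python
def conv_transpose_out_size(size, kernel=4, stride=2, padding=1, output_padding=0):
    """Spatial size after a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_generator_shapes(z_dim=100, start_size=4, depths=(512, 256, 128), out_channels=3):
    """Return (stage, shape) pairs for a hypothetical DCGAN-style generator.

    A fully connected layer maps z to depths[0] * start_size * start_size
    units, which are reshaped into a (depths[0], start_size, start_size)
    tensor. Each transposed convolution then doubles the height and width
    while reducing the depth, ending at out_channels (3 for RGB images).
    """
    fc_units = depths[0] * start_size * start_size
    shapes = [
        ("z", (z_dim,)),
        ("fully connected", (fc_units,)),
        ("reshape", (depths[0], start_size, start_size)),
    ]
    size = start_size
    for channels in list(depths[1:]) + [out_channels]:
        size = conv_transpose_out_size(size)
        shapes.append(("transposed conv", (channels, size, size)))
    return shapes


for name, shape in trace_generator_shapes():
    print(f"{name:16s} {shape}")
# Last line printed: transposed conv  (3, 32, 32)
```

With these settings each transposed convolution exactly doubles the spatial size, so three of them take the 4×4 starting tensor to 32×32, matching the assumed image size.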

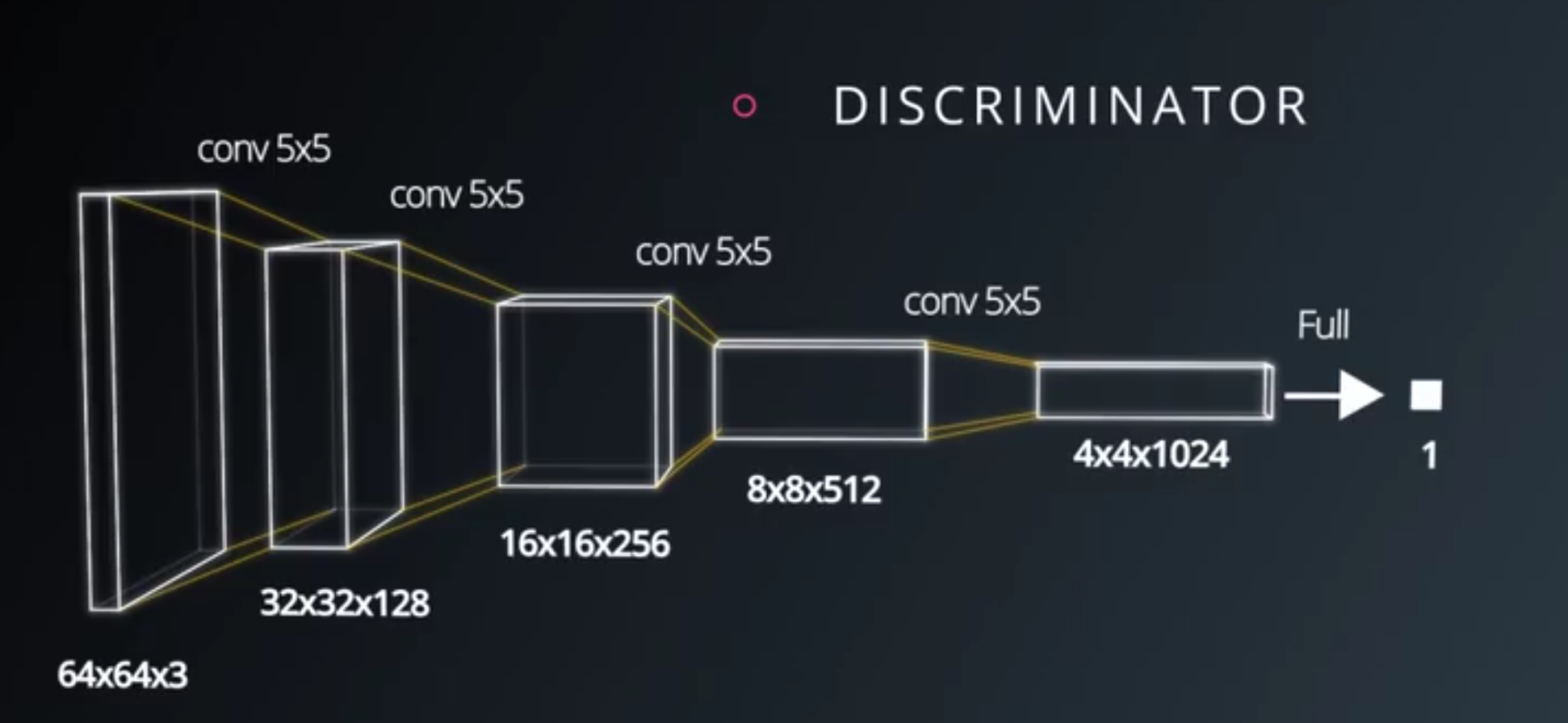

- DCGAN discriminator

- The discriminator is a convolutional network

- with one fully connected layer at the end that produces the sigmoid output.

- There are no max-pooling layers.

- The downsampling is done completely with the strided convolutional layers.

- The final convolutional layer is flattened, then connected to a single sigmoid unit.

- As in the generator, the layers use batch normalization on their inputs, but here the activations are leaky ReLUs.

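
The discriminator mirrors the generator: strided convolutions halve the spatial size in place of max pooling, and the final feature map is flattened into a single sigmoid unit. The sketch below traces the shapes and defines the two activations in plain Python. The depths (64, 128, 256), kernel size 4, stride 2, padding 1, and leaky ReLU slope of 0.2 are assumptions chosen for illustration, and the helper names are hypothetical.

```python
import math


def conv_out_size(size, kernel=4, stride=2, padding=1):
    """Spatial size after a strided convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def leaky_relu(x, alpha=0.2):
    """Leaky ReLU: pass positives through, scale negatives by alpha."""
    return x if x > 0 else alpha * x


def sigmoid(x):
    """Squash a logit into a probability that the image is real."""
    return 1.0 / (1.0 + math.exp(-x))


def trace_discriminator_shapes(image_size=32, in_channels=3, depths=(64, 128, 256)):
    """Return (stage, shape) pairs for a hypothetical DCGAN-style discriminator."""
    shapes = [("input image", (in_channels, image_size, image_size))]
    size = image_size
    for channels in depths:
        size = conv_out_size(size)  # stride 2 halves height and width; no max pooling
        shapes.append(("strided conv", (channels, size, size)))
    flat = depths[-1] * size * size
    shapes.append(("flatten", (flat,)))
    shapes.append(("fully connected + sigmoid", (1,)))
    return shapes


for name, shape in trace_discriminator_shapes():
    print(f"{name:26s} {shape}")
print(leaky_relu(-1.0), leaky_relu(2.0))  # -0.2 2.0
print(sigmoid(0.0))                        # 0.5
```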

Batch Normalization

- Batch normalization is a technique for improving the performance and stability of neural networks.

- The idea is to normalize the layer inputs so that they have a mean of zero and a variance of one, much like how we standardize the inputs to networks.

- Batch normalization is necessary to make DCGANs work.

- Scratch from Jupyter notebook
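
The normalization step can be sketched for a single feature across a mini-batch in plain Python. This follows the standard batch normalization formulation: subtract the batch mean, divide by the square root of the batch variance plus a small `eps`, then apply a learnable scale `gamma` and shift `beta` (held fixed here). `batch_norm` is a hypothetical helper, and it shows only the training-time computation; frameworks typically switch to running averages of the mean and variance at inference time.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Batch-normalize one feature across a mini-batch (training-mode forward pass).

    batch: list of values of the same feature, one per example.
    gamma, beta: learnable scale and shift (fixed here for illustration).
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased (population) variance
    normalized = [(x - mean) / math.sqrt(var + eps) for x in batch]
    return [gamma * x_hat + beta for x_hat in normalized]


activations = [2.0, 4.0, 6.0, 8.0]
out = batch_norm(activations)
mean_out = sum(out) / len(out)
var_out = sum((x - mean_out) ** 2 for x in out) / len(out)
print(mean_out, var_out)  # approximately 0 and 1
```

With the default `gamma=1` and `beta=0` the output is standardized; during training the network can learn other values of `gamma` and `beta` if a different scale or offset works better.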